This article will explain the relationship between the F-value, F-ratio, F-test, F-statistic, and F-distribution as they relate to the use of Analysis of Variance (ANOVA). We will also explain the importance of using the F-value, describe an industry example, and provide some tips on how to properly use the F-value.

Overview: What is the F-value in the context of ANOVA?

We need to start by describing what we mean by ANOVA. ANOVA is a statistical hypothesis test that allows you to determine whether there is a statistically significant difference between the means of three or more groups. Other hypothesis tests for differences in means, such as the t-test, compare the group means directly. ANOVA is unique in that it uses a sum of squares approach, similar to the calculation of a variance, to determine whether the means are statistically different.

ANOVA looks at three sources of variability:

- Total: Total variability among all observations

- Between: Variation between subgroup means (signal)

- Within: Random (chance) variation within each subgroup (noise)

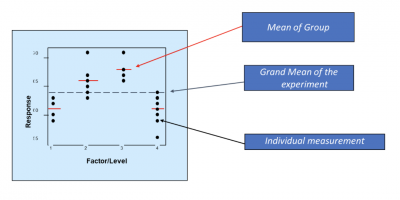

The F-value is the ratio (F-ratio) of the Between and Within variation, where each sum of squares is first divided by its degrees of freedom. Below is a graphic showing how the two variations are determined.

The Between variation is calculated by summing the squared differences between each group mean and the grand mean, with each squared difference weighted by the number of observations in that group. The Within variation is calculated by summing the squared differences between the individual values and their own group mean.

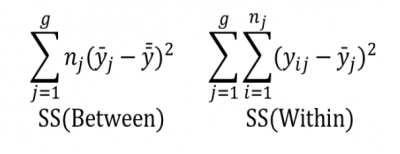
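For readers who prefer symbols, the two sums of squares described above can be written out as follows. The notation is assumed here rather than taken from the figures: k groups, n_j observations in group j, x_ij the i-th value in group j, x̄_j the mean of group j, and x̄ the grand mean. The last line is the standard identity showing that the Total variation splits exactly into the Between and Within parts.

```latex
\begin{aligned}
SS_{\text{Between}} &= \sum_{j=1}^{k} n_j \left(\bar{x}_j - \bar{x}\right)^2 \\
SS_{\text{Within}}  &= \sum_{j=1}^{k} \sum_{i=1}^{n_j} \left(x_{ij} - \bar{x}_j\right)^2 \\
SS_{\text{Total}}   &= \sum_{j=1}^{k} \sum_{i=1}^{n_j} \left(x_{ij} - \bar{x}\right)^2
                     = SS_{\text{Between}} + SS_{\text{Within}}
\end{aligned}
```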

Here are the calculations:

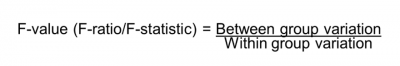

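The Between (Factor) and Within (Error) variations are now put in the form of a ratio. Each sum of squares is first divided by its degrees of freedom to give a mean square (MS). The ratio of the two mean squares is called the F-ratio, and the resulting value of that ratio is called the F-value.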

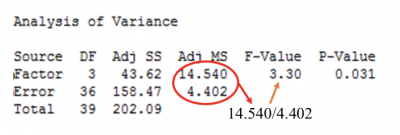
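In formula form, assuming k groups and N observations in total (notation chosen here for illustration), each sum of squares is divided by its degrees of freedom before the ratio is taken. When the null hypothesis and the ANOVA assumptions hold, the resulting F-value follows an F-distribution with k - 1 and N - k degrees of freedom.

```latex
\begin{aligned}
MS_{\text{Between}} &= \frac{SS_{\text{Between}}}{k - 1}, \qquad
MS_{\text{Within}}   = \frac{SS_{\text{Within}}}{N - k} \\[4pt]
F &= \frac{MS_{\text{Between}}}{MS_{\text{Within}}}
\end{aligned}
```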

The F-value and the calculations are typically represented in an ANOVA table as shown below:

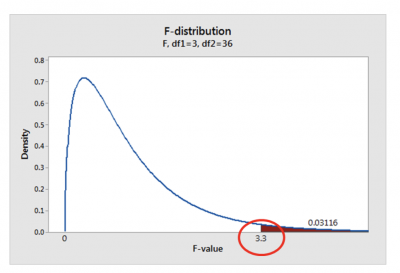
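To show where the numbers in an ANOVA table come from, here is a minimal one-way ANOVA sketch in plain Python. It uses hypothetical data (not the data behind the table above) and stops at the F-value; the p-value is left to your statistical software. The function name `one_way_anova` is illustrative, not taken from any library.

```python
def one_way_anova(groups):
    """Return one-way ANOVA table entries for a list of numeric groups.

    Teaching sketch: it reports DF, SS and MS for each source plus the F-value,
    but does not compute a p-value.
    """
    k = len(groups)
    values = [x for g in groups for x in g]
    n_total = len(values)
    grand_mean = sum(values) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Between: squared distance of each group mean from the grand mean,
    # weighted by the number of observations in the group.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within: squared distance of each value from its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    # Total: squared distance of each value from the grand mean.
    ss_total = sum((x - grand_mean) ** 2 for x in values)

    df_between = k - 1
    df_within = n_total - k
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return {
        "Between": (df_between, ss_between, ms_between),
        "Within": (df_within, ss_within, ms_within),
        "Total": (n_total - 1, ss_total, None),  # no mean square for Total
        "F": ms_between / ms_within,
    }


if __name__ == "__main__":
    # Hypothetical data: three groups of four observations each.
    groups = [[10, 12, 11, 13], [14, 15, 13, 16], [11, 10, 12, 11]]
    table = one_way_anova(groups)
    print(f"{'Source':<8}{'DF':>4}{'SS':>10}{'MS':>10}")
    for source in ("Between", "Within", "Total"):
        df, ss, ms = table[source]
        ms_text = f"{ms:10.3f}" if ms is not None else " " * 10
        print(f"{source:<8}{df:>4}{ss:>10.3f}{ms_text}")
    print(f"F = {table['F']:.3f}")
```

For the hypothetical data shown, SS Between is about 28.667 on 2 degrees of freedom, SS Within is 12 on 9 degrees of freedom, and F = 10.75.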

Now that you understand what the F-value is and how it is calculated, you might be wondering how to use it to make a decision about the means of your groups. Conceptually, the F-value is overlaid on the F-distribution for the degrees of freedom in your ANOVA table, as shown below.

The number to the right (0.03116) is the p-value, the area under the F-distribution to the right of the F-value. It provides guidance on whether to reject or fail to reject the null hypothesis (Ho) of ANOVA. The Ho is that all group means are equal. The alternative hypothesis (Ha) is that at least one group mean is different.

Because this p-value is less than an alpha value of 0.05, you will reject the null hypothesis and claim there is a difference in the means of your groups. This assumes you have fulfilled the assumptions of the ANOVA test, especially that the variances of the groups are equal.
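The p-value decision can be sketched in Python. This is a minimal, self-contained sketch that assumes no statistics library is available: it computes the right-tail area of the F-distribution through the regularized incomplete beta function, evaluated with the standard modified Lentz continued fraction. The function names and the example F-value and degrees of freedom are hypothetical; in practice your statistical software reports this p-value for you.

```python
import math


def _beta_continued_fraction(a, b, x, max_iter=300, eps=1e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300  # guards against division by zero
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even term of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd term of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def regularized_incomplete_beta(a, b, x):
    """I_x(a, b), the regularized incomplete beta function."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # The continued fraction converges fast on this side; otherwise use
    # the symmetry I_x(a, b) = 1 - I_{1-x}(b, a).
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_continued_fraction(a, b, x) / a
    return 1.0 - front * _beta_continued_fraction(b, a, 1.0 - x) / b


def f_p_value(f_value, df_between, df_within):
    """Area of the F-distribution to the right of f_value (the ANOVA p-value)."""
    if f_value <= 0.0:
        return 1.0
    x = df_within / (df_within + df_between * f_value)
    return regularized_incomplete_beta(df_within / 2.0, df_between / 2.0, x)


def anova_decision(p_value, alpha=0.05):
    """Compare the p-value with alpha and state the decision about Ho."""
    return "reject Ho" if p_value < alpha else "fail to reject Ho"


if __name__ == "__main__":
    # Hypothetical result: F = 4.2 with 2 and 27 degrees of freedom.
    p = f_p_value(4.2, 2, 27)
    print(f"p = {p:.4f} -> {anova_decision(p)}")
```

The p-value here is about 0.026, so at alpha = 0.05 the sketch rejects Ho, mirroring the reasoning in the paragraph above.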

3 benefits of the F-value

Here are some of the key benefits of using the F-value to make decisions about your group means.

1. Simple ratio

The F-value is a ratio of two mean squares, each of which is a sum of squares divided by its degrees of freedom. The resulting value is used to determine what action to take with respect to the null hypothesis.

2. Statistically based decision making

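It is easy to determine whether multiple group means are mathematically different; sample means almost never match exactly. But a mathematical difference does not indicate whether that difference is real or just random variation. The F-value, together with its p-value, gives you a statistical basis for making that call.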
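To see why a mathematical difference alone is not enough, the hypothetical simulation below draws three groups from one and the same normal population (mean 100, standard deviation 5, 10 values per group, all assumed values), so the null hypothesis is true by construction. The sample means differ in every trial, yet the F-test flags a significant difference in only about alpha (5%) of the trials. The p-value uses the closed form of the F right tail, (1 + 2F/df_within)^(-df_within/2), which holds only when there are exactly three groups (2 degrees of freedom between groups).

```python
import random


def f_statistic(groups):
    """Return (F, df_within) for a list of numeric groups."""
    values = [x for g in groups for x in g]
    grand = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    ss_b = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_w = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_b, df_w = len(groups) - 1, len(values) - len(groups)
    return (ss_b / df_b) / (ss_w / df_w), df_w


def p_value_three_groups(f_value, df_within):
    """Right-tail area of F(2, df_within); valid only for three groups."""
    return (1 + 2 * f_value / df_within) ** (-df_within / 2)


def simulate_null(trials=4000, n_per_group=10, alpha=0.05, seed=1):
    """Fraction of trials where the means differ, and where the F-test is significant."""
    rng = random.Random(seed)
    means_differ = 0
    significant = 0
    for _ in range(trials):
        # All three groups come from the SAME population, so Ho is true.
        groups = [[rng.gauss(100, 5) for _ in range(n_per_group)] for _ in range(3)]
        means = [sum(g) / len(g) for g in groups]
        if len(set(means)) > 1:
            means_differ += 1
        f_value, df_w = f_statistic(groups)
        if p_value_three_groups(f_value, df_w) < alpha:
            significant += 1
    return means_differ / trials, significant / trials


if __name__ == "__main__":
    differ, sig = simulate_null()
    print(f"means differ in {differ:.1%} of trials; F-test significant in {sig:.1%}")
```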

3. Software

Most statistical software packages today do all the necessary calculations of the sums of squares, F-value, and p-value. The results are usually presented in an ANOVA table for easy understanding and interpretation.

Why is the F-value important to understand?

The F-value is a popular and powerful statistical tool for making decisions regarding differences in group means. It is important to understand for the following reasons.

Based on group sums of squares, not directly on group means

It might be counterintuitive, but you can use variances to make decisions about means.
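The sketch below illustrates the point with hypothetical data: adding a constant to every value in one group moves that group's mean but leaves the spread inside the group untouched. The Within sum of squares stays the same, the Between sum of squares grows, and so does the F-value. The helper names are illustrative, not from any library.

```python
def sums_of_squares(groups):
    """Return (SS_between, SS_within) for a list of numeric groups."""
    values = [x for g in groups for x in g]
    grand_mean = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return ss_between, ss_within


def f_value(groups):
    """F = (SS_between / (k - 1)) / (SS_within / (N - k))."""
    ss_between, ss_within = sums_of_squares(groups)
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


def shift_group(groups, index, amount):
    """Return a copy of groups with `amount` added to every value in one group."""
    return [[x + amount for x in g] if i == index else list(g)
            for i, g in enumerate(groups)]


if __name__ == "__main__":
    base = [[10, 12, 11, 13], [14, 15, 13, 16], [11, 10, 12, 11]]  # hypothetical data
    moved = shift_group(base, index=2, amount=5)  # raise the third group's mean by 5
    for label, groups in (("original", base), ("shifted", moved)):
        ss_b, ss_w = sums_of_squares(groups)
        print(f"{label:>8}: SS_between={ss_b:.3f}  SS_within={ss_w:.3f}  F={f_value(groups):.3f}")
```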

ANOVA assumptions

For the F-value and p-value to have meaning, you must adhere to the assumptions of ANOVA, particularly that the variances of the different groups are equal.

Interpretation of the F-value

ANOVA uses the F-value to determine whether the between-group variability of the means is larger than the within-group variability of the individual values. If the ratio of between-group to within-group variation is sufficiently large, that is, larger than the critical value of the F-distribution for your chosen alpha and degrees of freedom, you can conclude that not all the means are equal.
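"Sufficiently large" has a precise meaning: the F-value must exceed the critical value of the F-distribution for your alpha and degrees of freedom. For the common case of three groups (2 degrees of freedom between groups) the critical value has a closed form, sketched below under that assumption; for other numbers of groups you would read it from an F-table or statistical software. The function names are hypothetical.

```python
def f_critical_three_groups(alpha, df_within):
    """Critical F-value when df_between = 2 (exactly three groups).

    Solves alpha = (1 + 2 f / df_within) ** (-df_within / 2) for f,
    which is the right-tail area of the F(2, df_within) distribution.
    """
    return (df_within / 2) * (alpha ** (-2 / df_within) - 1)


def is_significant(f_value, alpha, df_within):
    """True when the F-value exceeds the three-group critical value."""
    return f_value > f_critical_three_groups(alpha, df_within)


if __name__ == "__main__":
    for df_within in (6, 12, 27, 60):
        crit = f_critical_three_groups(0.05, df_within)
        print(f"df_within={df_within:>3}: critical F at alpha 0.05 = {crit:.3f}")
```

The critical value shrinks as the within-group degrees of freedom grow (about 5.14 for 6 and about 3.89 for 12), so the same F-value can be significant with a large sample and not with a small one.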

An industry example of the F-value

A plant manager is trying to determine whether there is any true difference in productivity among his three manufacturing shifts. He has collected production data from each of the three shifts at the plant.

His Lean Six Sigma (LSS) Black Belt, Travis, volunteered to run an ANOVA test to see whether there was any significant difference in the mean production of the three shifts.

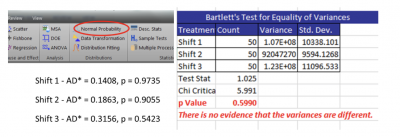

Travis tested the two ANOVA assumptions: normality of each of the three data sets, using the Anderson-Darling normality test, and equality of variances, using Bartlett's test. Both assumptions were fulfilled.

See the results below.

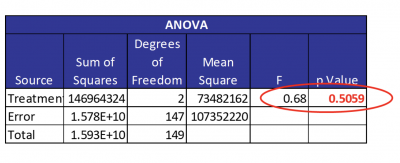

Note that all the p-values are higher than an alpha of 0.05, so you cannot reject the hypothesis that the three data sets are normally distributed. You can also see that there is no statistical evidence that the three variances differ. Travis then ran the ANOVA using a statistical software package. The results are presented below.

With a small F-value of 0.68 and a large p-value of 0.5059, Travis failed to reject the null hypothesis and concluded there is no statistically significant difference in the production means of the three shifts.
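Bartlett's test, which Travis used for the equal-variance check, can be sketched as follows. This is a hypothetical illustration, not a reproduction of Travis's software output: it computes Bartlett's statistic with its usual correction factor and uses the chi-square approximation, whose right tail is simply exp(-T/2) when there are exactly three groups (2 degrees of freedom). The Anderson-Darling normality check is not reproduced here, and the function name is illustrative.

```python
import math
from statistics import variance


def bartlett_three_groups(groups):
    """Bartlett's test for equal variances across exactly three groups.

    Returns (statistic, p_value) using the chi-square approximation with
    k - 1 = 2 degrees of freedom. Each group needs a nonzero variance.
    """
    if len(groups) != 3:
        raise ValueError("this sketch handles exactly three groups")
    k = len(groups)
    sizes = [len(g) for g in groups]
    variances = [float(variance(g)) for g in groups]  # sample variances (n - 1)
    n_total = sum(sizes)
    # Pooled variance weights each group variance by its degrees of freedom.
    pooled = sum((n - 1) * v for n, v in zip(sizes, variances)) / (n_total - k)
    numerator = ((n_total - k) * math.log(pooled)
                 - sum((n - 1) * math.log(v) for n, v in zip(sizes, variances)))
    correction = 1 + (sum(1 / (n - 1) for n in sizes) - 1 / (n_total - k)) / (3 * (k - 1))
    statistic = numerator / correction
    # Chi-square with 2 degrees of freedom has right-tail area exp(-x / 2).
    p_value = math.exp(-statistic / 2)
    return statistic, p_value


if __name__ == "__main__":
    # Hypothetical shift data with clearly different spreads.
    shifts = [[10, 10.1, 9.9, 10.05, 9.95], [10, 12, 8, 11, 9], [10, 20, 0, 15, 5]]
    stat, p = bartlett_three_groups(shifts)
    print(f"Bartlett statistic = {stat:.3f}, p = {p:.3g}")
```

A p-value above alpha, as in Travis's case, means there is no evidence against equal variances; the hypothetical data above, with very different spreads, gives a p-value far below 0.05.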

3 best practices when thinking about the F-value

While your statistical software will do all the calculations, there are some things you can do to be sure your results are meaningful.

1. Test all assumptions of ANOVA

The two primary assumptions of ANOVA are that each group of data is normally distributed and that the variances of the groups are equal. If the assumptions are seriously violated, you may need to use a non-parametric test to determine whether your groups are statistically different.
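One commonly used non-parametric alternative to one-way ANOVA is the Kruskal-Wallis test, which compares the ranks of the data rather than the raw values. The sketch below is a simplified, hypothetical illustration: it assigns average ranks to tied values but omits the tie-correction factor, and it uses the chi-square approximation with the closed-form tail exp(-H/2) that applies only to exactly three groups. That approximation is rough for very small groups, so rely on statistical software for real decisions.

```python
import math


def average_ranks(values):
    """Rank values 1..N, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def kruskal_wallis_three_groups(groups):
    """Return (H, p_value) for exactly three groups, without tie correction."""
    if len(groups) != 3:
        raise ValueError("this sketch handles exactly three groups")
    values = [x for g in groups for x in g]
    ranks = average_ranks(values)
    n_total = len(values)
    weighted = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)
    # Chi-square approximation with k - 1 = 2 degrees of freedom.
    p_value = math.exp(-h / 2)
    return h, p_value


if __name__ == "__main__":
    h, p = kruskal_wallis_three_groups([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
    print(f"H = {h:.3f}, approximate p = {p:.4f}")
```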

2. Select an appropriate alpha value

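Depending on the question you are trying to answer, you can select an alpha value of 0.01, 0.05, 0.10, or any other reasonable value. Alpha is the risk you accept of rejecting the null hypothesis when it is actually true, so a smaller alpha guards against false alarms at the cost of making real differences harder to detect.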

3. Do a Measurement System Analysis (MSA)

Unless you are confident in the ability of your measurement system to collect valid data, your results may be questionable.

Frequently Asked Questions (FAQ) about the F-value

1. Where did the term F-value come from?

The F in F-value, F-test, and F-distribution honors Sir Ronald Fisher, a British mathematician, statistician, geneticist, and academic. The letter was chosen in his honor by the American statistician George W. Snedecor.

2. What statistical analysis can the F-value be used for?

The F-value or F-test is used primarily in ANOVA and regression.

3. How do you interpret a large F-value?

The F-value is the ratio of your between-group variation to your within-group variation. A large F-value means the between-group variation is large relative to the within-group variation. If the F-value is large enough that its p-value falls below your chosen alpha, you can conclude there is a statistically significant difference in your group means.

F-value recap

The F-value is the test statistic of an ANOVA hypothesis test for determining whether there is a statistically significant difference in the means of three or more groups of data. It is the ratio of two mean squares: the sum of squares for the differences between each group mean and the grand mean (between variation), and the sum of squares for the differences between the individual values and their group mean (within variation), each divided by its degrees of freedom.

It can be described as the ratio of the between variation (signal) to the within variation (noise) of your data. A large F-value means your signal, the differences in means, is greater than would be expected from random chance alone. Statistical software is typically used to do the calculations.