Robotic process automation (RPA), commonly referred to as “bots,” is a type of software that can mimic human interactions across multiple systems to bridge gaps in processes that previously had to be handled manually. RPA software applications can be integrated with other advanced technologies such as machine learning or artificial intelligence. But at the most basic level, they act like super-macros following a detailed script to complete standardized tasks that do not require the application of judgment.

Why Combine RPA and Lean Six Sigma?

Replacing manual work with bots removes human error from the automated steps, reduces rework and quality checks, and increases accuracy. Bots can work much faster than humans and at any hour of the day, so long as the underlying systems are operational. The potential to reduce overhead costs and shorten process cycle time is vast. Bots also provide enhanced controls for risk avoidance.

Bots can serve as a foot in the door to gain traction for a quality program. Senior-level executives get excited by the potential of this relatively affordable technology. By incorporating a thoughtful Lean Six Sigma (LSS) process review into a company’s bot deployment strategy, quality programs will gain additional visibility and leadership support.

Effective Bot Deployment at Edward Jones

Edward Jones is a financial services firm serving more than 7 million clients in the US and Canada. Its operations division began exploring RPA in 2017 and put its first bot into production in November 2018. Since then, the division has deployed 17 additional bots, yielding capacity savings equivalent to 15 full-time employees, which in turn generated more than a million dollars in cost avoidance. While still at an early stage in this journey, the operations division has developed a structured approach using LSS tools to assess process readiness for automation, minimize or remove non-value-added work steps prior to development (abandonment), and redesign the process to fully leverage the benefits of RPA.

LSS Process Review

Using a questionnaire to begin their intake process, business areas submit critical data regarding process volumes, capacity needs, system utilization and risk level. This data feeds into a prioritization matrix that allows them to decide where to focus energy and time. Once a process is identified for RPA, a member of the quality team engages the business area for an LSS process review using familiar tools such as a project charter, stakeholder analysis, SIPOC (suppliers, inputs, process, outputs, customers) and process maps.
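A prioritization matrix of this kind can be sketched as a weighted score over the questionnaire answers. The article does not describe how Edward Jones weights or scales the criteria, so the weights, the 1-to-5 rating scale and all names below are illustrative assumptions only.

```python
from dataclasses import dataclass

# Hypothetical weights: the article names the criteria but not their weighting.
WEIGHTS = {
    "volume": 0.4,
    "capacity_need": 0.3,
    "system_utilization": 0.1,
    "risk": 0.2,
}


@dataclass
class IntakeRequest:
    """One questionnaire submission, each criterion rated 1 (low) to 5 (high)."""
    name: str
    volume: int
    capacity_need: int
    system_utilization: int
    risk: int


def priority_score(request: IntakeRequest) -> float:
    """Weighted sum of the ratings, rounded to two decimals."""
    total = sum(weight * getattr(request, field) for field, weight in WEIGHTS.items())
    return round(total, 2)


def rank(requests: list[IntakeRequest]) -> list[IntakeRequest]:
    """Highest-scoring requests first."""
    return sorted(requests, key=priority_score, reverse=True)
```

Calling `rank()` on the submitted requests gives an ordered list that a quality team could use as a starting point for discussion rather than as a final decision.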

After thoroughly understanding the process’s current state, the practitioner and corresponding business area redesign the process for robotics. Next, they complete an FMEA (failure mode and effects analysis) and business continuity plan to ensure process risk is being adequately controlled. After this LSS process review has concluded, a broad group of experts – including robotics developers, internal audit staff, risk leaders and senior leadership from all impacted business areas – is brought together to jointly review the robotics proposal and agree on a go/no-go decision.
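In a conventional FMEA, each failure mode is rated for severity, occurrence and detection, and the product of the three gives a risk priority number (RPN) used to rank where controls are needed. The sketch below shows that calculation. The failure modes and ratings are made-up examples, not figures from Edward Jones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    """One FMEA row; each rating uses the customary 1 (best) to 10 (worst) scale."""
    description: str
    severity: int
    occurrence: int
    detection: int

    @property
    def rpn(self) -> int:
        """Risk priority number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


def rank_by_rpn(modes: list[FailureMode]) -> list[FailureMode]:
    """Highest-risk failure modes first."""
    return sorted(modes, key=lambda mode: mode.rpn, reverse=True)


# Hypothetical rows for illustration only.
example_modes = [
    FailureMode("Underlying system unavailable", severity=7, occurrence=3, detection=2),
    FailureMode("Coding error enters wrong amount", severity=9, occurrence=2, detection=4),
]
```

Ranking by RPN is a convention rather than a rule; many teams also review any row with a high severity rating regardless of its RPN.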

A critical component of this process review is thorough documentation of every step along the way. Using an Excel playbook to organize all the tools in one place enables a smooth transition as the effort moves from the quality team to the robotics development team. Then, this comprehensive documentation is retained by the business area for ongoing maintenance. Specific elements of this documentation include a systems inventory, a record of all sign-off dates and approvals and a business continuity plan for disaster recovery. Having complete documentation enables the business areas to take a proactive approach when faced with upcoming system changes or unexpected work disruptions. It also equips business areas with any data points required for routine internal or external audits.

Deployment Pitfalls to Avoid

There are some specific areas of concern when it comes to RPA.

- Communication: Provide clarity to business areas about what RPA can and cannot do, and what processes fit best with this technology. Without an accurate understanding of the capabilities of RPA, there will be an influx of unsuitable requests for this new technology and, as a result, many disappointed business areas and wasted effort spent putting together their business cases. At Edward Jones, the most common misunderstanding concerned the limited reading ability of the specific RPA product being used. While the bots can recognize characters in static fields, they are not able to interpret characters in an unstructured context. This ruled out many initial RPA requests. Additionally, while comparing RPA to macros was initially an effective way to explain the technology to business leaders who were not knowledgeable about technology development, this comparison created an unfortunate misconception that coding and implementing bots was as fast and easy as creating a macro. Business areas were not expecting development to take four to six months for what they perceived to be a simple request.

- Change Management: Incorporate thoughtful change management throughout the deployment at all levels of the organization. Leveraging bots will take away manual tasks being completed by employees. Some employees may welcome the automation of monotonous tasks, but others may view this technology as a threat to job security. Supervisors will need to adapt and grow their skills to include oversight of the RPA technology. Strong people leaders often don’t have the same level of competency in the technical space, and they will need to quickly increase knowledge and skill to effectively manage their automated processes. Senior/C-suite leaders will need to consider the inherent risks associated with using RPA, the infrastructure and skills needed to support an RPA program, and how to obtain the needed resources and talent.

- Human Resources: Bots may create job redundancy, with the potential for job loss or reassignment. Engage human resources early to navigate these situations.

- Governance: Balance senior leader involvement so they feel comfortable with automation without extra levels of required approvals that slow the development process down.

- Don’t Force a Problem to Fit the Solution: RPA is not the right solution for every bad process. In the early phase of bot deployment, it is easy to let excitement about the new technology lead to poor choices around when to apply RPA. This leads to disappointing results that could undermine the entire bot deployment. Identify clear criteria regarding when bots are an appropriate solution and use a disciplined approach to evaluate each new process improvement opportunity. Consider non-bot solutions before a final decision is reached.

- Vendor Approvals: Any third-party vendors must permit bots to interface with their systems. Review vendor contracts or have new contracts signed to ensure bots are legally allowed to interact with vendor systems and web sites before beginning development.

- Resource Constraints: Set clear expectations with business areas about the work involved and resources needed to design and implement an RPA solution. The quality team and technical developers do not have the knowledge required about the specific processing steps to complete this work without a subject-matter expert from the business area being heavily involved throughout the project life cycle.

- Results: Heavy focus on capacity savings only tells part of the story. Identify other meaningful methods of communicating value from RPA implementation, such as risk reduction, faster cycle time, improved client experience or increased accuracy.
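Several of the pitfalls above translate into simple screening questions that can be asked before a business case is written. The following is a minimal sketch of such a screen. The four criteria are drawn from the points above, but the field names and the pass/fail treatment are assumptions rather than the firm’s actual intake criteria.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Yes/no answers for one process proposed for RPA (hypothetical fields)."""
    rule_based: bool            # task follows a script and needs no judgment
    structured_input: bool      # data arrives in static fields, not free text
    vendor_permits_bots: bool   # third-party contracts allow bot access
    sme_available: bool         # a subject-matter expert can stay involved


def screen(candidate: Candidate) -> list[str]:
    """Return the blocking reasons; an empty list means the screen is passed."""
    checks = [
        (candidate.rule_based, "task requires judgment"),
        (candidate.structured_input, "input is unstructured text the bot cannot read"),
        (candidate.vendor_permits_bots, "vendor approval is missing"),
        (candidate.sme_available, "no subject-matter expert is available"),
    ]
    return [reason for passed, reason in checks if not passed]
```

Returning the reasons, rather than a bare yes or no, gives the requesting business area something concrete to act on or to use when considering a non-bot solution.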

Case Study: Automating Retirement Disbursements to Charities

An example of an RPA implementation at Edward Jones involves the process of receiving, validating and executing on client requests to send monetary donations from qualified retirement accounts to charitable organizations. Prior to implementing the bot, the Qualified Charitable Distribution (QCD) process required 11 hours of manpower each day to get through the volume of donations – and the number of requests had been doubling each month.

The process had five to 10 errors monthly due to the manual data entry required, which in turn took one to three hours of leader or senior processor time to resolve. A bot was designed and implemented that would validate the original request (quality check) and then enter the appropriate data into a computer screen to issue the check to the selected charity.

Stakeholder Analysis and SIPOC

After the project charter was created and agreed upon by the project Champion and project team, a stakeholder analysis was conducted to identify any additional individuals or business areas that were upstream or downstream of the process or might be affected by a change to the process. These parties were consulted or communicated with throughout the effort to ensure process impacts were understood and considered as the automation opportunity was identified and designed.

Next, a SIPOC matrix was created to understand all the process inputs, including systems, data files and end users. Together, the stakeholder analysis and SIPOC are essential in ensuring all critical components of the process upstream and downstream are identified early in the automation effort so no processing gaps are created during RPA development.

SIPOC Analysis: SIPOC for the QCD Automation Project

| Supplier | Inputs | Process | Outputs | Customer |
|---|---|---|---|---|
| Client, branch team | Client instructions, intranet form message | Branch team sends form message with client instructions for QCD | Unexecuted client request in the retirement department queue | Retirement support team |
| Retirement support team | Form message, client account information, IRS rules, client request | Retirement associate reviews client request for QCD to confirm eligibility | Validated client request | Retirement support team |
| Retirement support team | Validated client request | Issue check | Executed request, issued check | Client, branch team |
| Retirement support team | Client request, issued check | Close client request on system | Completed client request for QCD | Client, branch team |

Current- and Future-State Process Maps

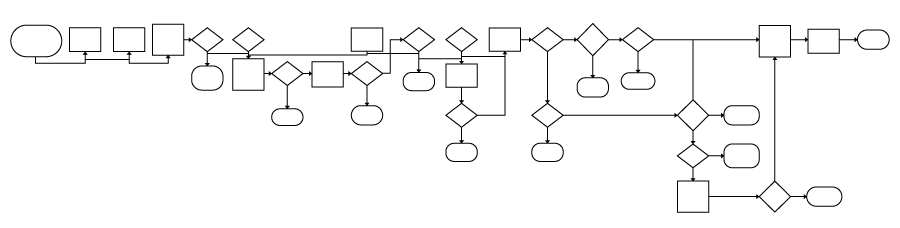

The next step was to create detailed current- and future-state process maps. The current-state process map must include enough detail to highlight all the data sources required by the process, and where that data must be entered to move the process forward. The future-state map must incorporate all of those critical points, while also accounting for the limitations of RPA technology (inability to “read”) and the advantages of RPA (directly ingesting data files, speed and accuracy).

For the QCD process, the client verification step needed to be handled differently for RPA than in the original process. Previously, an employee compared client names between the original client request and the account registration referenced in the request to ensure a match. Names can be difficult for RPA to match because the technology doesn’t understand common nicknames that might be used interchangeably with legal names. For example, “Bill” and “William” would be flagged as a mismatch by the robotic technology, while a human processor would recognize those as referring to the same individual. To avoid large numbers of false positives from the bot flagging mismatches caused by nicknames, an alternative form of identification matching was used, in this case a Social Security number.
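The difference between the two matching strategies can be illustrated with a short sketch. It assumes the bot compares strings literally and that Social Security numbers may arrive with or without dashes. The function names and normalization rules are hypothetical and do not describe the actual bot’s logic.

```python
import re


def names_match(request_name: str, account_name: str) -> bool:
    """Literal comparison, ignoring only case and surrounding whitespace."""
    return request_name.strip().casefold() == account_name.strip().casefold()


def normalize_ssn(raw: str) -> str:
    """Strip everything except digits and require exactly nine of them."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) != 9:
        raise ValueError("expected a 9-digit number")
    return digits


def ssn_match(request_ssn: str, account_ssn: str) -> bool:
    """Compare identifiers after normalization, so formatting does not matter."""
    return normalize_ssn(request_ssn) == normalize_ssn(account_ssn)


# A nickname defeats the literal name comparison...
print(names_match("Bill Smith", "William Smith"))   # False
# ...while a numeric identifier matches regardless of formatting.
print(ssn_match("000-12-3456", "000123456"))        # True
```

A numeric identifier has a single canonical form once formatting is removed, which is what makes it better suited than a name to a rule-following comparison.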

In a typical Six Sigma effort, the goal is to achieve a more streamlined future-state process map with fewer processing steps and fewer decision points. One key difference in an RPA effort is that the future-state process map may contain more, not fewer, steps and decision points. This is normal and shows that the automation capability is being fully utilized to provide a higher level of accuracy. Since the bot processes at a speed much faster than a human can achieve, these additional quality checks do not add to the overall process cycle time. Each decision point with RPA represents a quality assurance checkpoint, allowing for the final output to have higher accuracy than the original process achieved.

Risk Assessment

Once the future automated state has been identified, conduct a risk assessment to understand the risks associated with the current process and how the process risks may be affected by RPA. The largest risk associated with the QCD process was the manual nature of the process and likelihood of human error. This risk was eliminated by using bots.

However, automation adds different types of risks, including system failures and coding errors. By identifying potential risks and using control reports to quickly identify and remediate issues, these risks can be effectively managed.

Business Continuity Plan

The final element of the process review is a business continuity plan, specifically focused on failure of RPA to successfully perform the programmed tasks. Consideration should be given to a failure of the bot itself but also any underlying systems that the bot needs to interact with to obtain data or execute requests. Planning should include how to perform the work if the automation is not operational for a particular timespan as well as how to identify and resolve errors made by the bot if the programming becomes corrupted.

Through this planning exercise, a critical aspect of the QCD process was identified that might have led to future bot failure had it not been remedied. Volumes for this highly seasonal process rise drastically at year end, and a single bot was unlikely to keep up with the work at this peak. Programmers were able to proactively solve this issue by diverting process volume onto three separate bots to stay on top of the surge of work during these high-volume time periods.
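The capacity question behind this decision can be sketched in two steps: estimate how many bots a peak day requires, then spread the incoming requests across them. The article does not give the peak volume, the per-bot throughput or the distribution method, so the round-robin approach and every number used with this sketch are assumptions for illustration.

```python
import math
from itertools import cycle


def bots_needed(daily_requests: int, per_bot_daily_capacity: int) -> int:
    """Smallest number of bots whose combined capacity covers the daily volume."""
    return math.ceil(daily_requests / per_bot_daily_capacity)


def distribute(request_ids, bot_count: int) -> list[list]:
    """Assign requests to bots in round-robin order, one queue per bot."""
    queues = [[] for _ in range(bot_count)]
    for queue, request_id in zip(cycle(queues), request_ids):
        queue.append(request_id)
    return queues
```

For example, a hypothetical peak of 2,500 requests per day against a hypothetical capacity of 1,000 per bot gives `bots_needed(2500, 1000) == 3`. Round-robin keeps the queues within one request of each other, which is a reasonable default when requests take roughly equal time to process.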

Results

The QCD bot was implemented in September 2019 and immediately realized 11 hours of daily capacity savings with no errors. The total project cycle time, from the initial continuous improvement analysis through bot design, development, testing and implementation, was seven months. Since implementation, 100 percent of the process has been automated with zero errors. Process risk was reduced by one point on a 10-point scale by eliminating human error from manual work steps.

During routine follow-up six months after bot implementation, the project team learned that the benefits received from the automation had grown significantly. The volume of client requests for charitable distributions had increased rapidly, so the bot was now performing work that would have taken 34 hours – or five employees – to complete each day.
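The growth in benefit can be checked with simple arithmetic on the figures reported above. The per-employee figure below is derived from the article’s own equivalence of 34 hours to five employees. It is an implied value, not one the article states directly.

```python
# Figures taken from the article.
HOURS_PER_DAY_AT_LAUNCH = 11
HOURS_PER_DAY_AT_FOLLOW_UP = 34
EMPLOYEES_AT_FOLLOW_UP = 5

# How much the daily workload handled by the bot grew in six months.
growth_factor = HOURS_PER_DAY_AT_FOLLOW_UP / HOURS_PER_DAY_AT_LAUNCH

# Implied processing hours per employee per day (derived, not stated).
hours_per_employee = HOURS_PER_DAY_AT_FOLLOW_UP / EMPLOYEES_AT_FOLLOW_UP

print(f"Workload growth: {growth_factor:.1f}x")                 # Workload growth: 3.1x
print(f"Implied hours per employee: {hours_per_employee:.1f}")  # Implied hours per employee: 6.8
```

In other words, the bot was absorbing roughly three times the workload it had replaced at launch, without a matching increase in staff.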

Conclusion

Don’t shortcut the methodology when leveraging RPA and other new technologies. Technology masks a bad process, so clean up the underlying work steps first to maximize the benefit of RPA.